In this latest stage of my RAPTURE journey, I’ve taken a significant step forward – moving from cloud-based workflows to fully local, real-time rendering on a laptop with an RTX 5090 GPU. This shift transforms both the speed and the nature of interaction. Where there was previously a noticeable delay between gesture and AI response, the experience is now fluid, performative, and immediate.

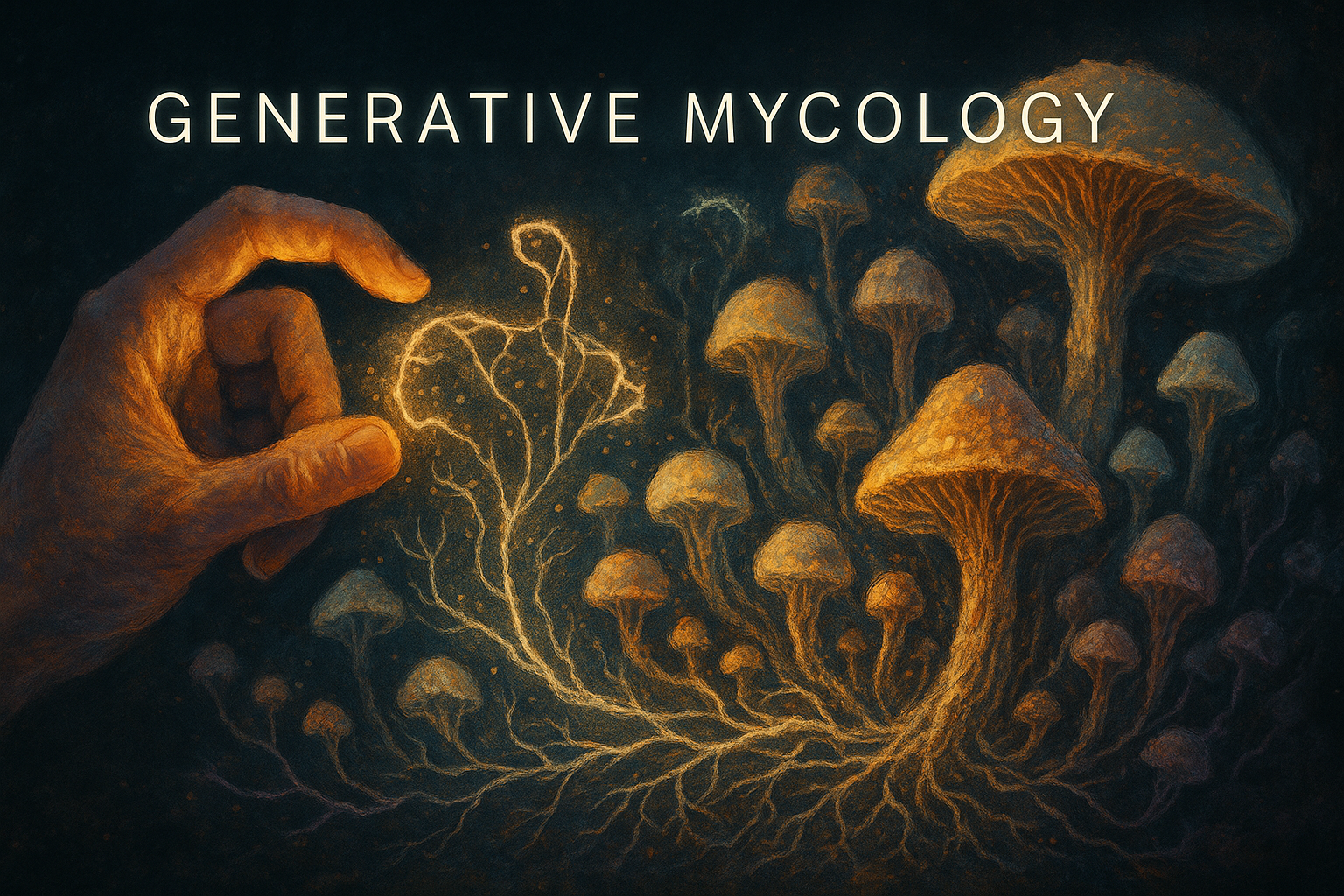

At the heart of this new experiment is a gesture-based interface I built using Python and MediaPipe. It relies on MediaPipe’s hand tracking to follow hand movements, letting users select colours and tools and draw shapes through intuitive pinching and pointing gestures. These shapes are then streamed into the AI engine in real time, triggering generative responses based on fungal and mycelial prompts.
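Pinch detection of this kind usually comes down to measuring the distance between two fingertip landmarks. The sketch below is not the interface’s actual code. It assumes landmarks arrive as normalized (x, y) pairs in MediaPipe’s 21-point hand layout, where index 4 is the thumb tip and index 8 is the index fingertip. The `PinchDetector` class and its `press`/`release` thresholds are hypothetical and would need tuning per camera. Using two thresholds (hysteresis) stops the pinch from flickering on and off when the fingers hover near the boundary.

```python
import math
from typing import Sequence, Tuple

# MediaPipe Hands returns 21 landmarks per hand; these indices are fixed by that model.
THUMB_TIP = 4
INDEX_FINGER_TIP = 8

Landmark = Tuple[float, float]  # normalized (x, y), roughly 0..1 across the frame


class PinchDetector:
    """Thumb-index pinch detector with hysteresis (hypothetical thresholds)."""

    def __init__(self, press: float = 0.05, release: float = 0.08) -> None:
        if press >= release:
            raise ValueError("press threshold must be smaller than release threshold")
        self.press = press      # distance below which a pinch starts
        self.release = release  # distance above which a pinch ends
        self.pinched = False

    def update(self, landmarks: Sequence[Landmark]) -> bool:
        """Feed one frame of landmarks; return whether the hand is pinching."""
        tx, ty = landmarks[THUMB_TIP]
        ix, iy = landmarks[INDEX_FINGER_TIP]
        dist = math.hypot(tx - ix, ty - iy)
        # While pinched, require a larger gap to release; otherwise a smaller gap to press.
        threshold = self.release if self.pinched else self.press
        self.pinched = dist < threshold
        return self.pinched
```

With MediaPipe’s Python Hands solution, the input list could be built from a detected hand as `[(lm.x, lm.y) for lm in hand.landmark]`.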

Painting with Gesture

The interface feels natural – pinching fingers to select drawing tools and colours, then drawing directly in the air. Simple sketches become inputs for the AI, which responds instantly with evolving, organic forms. It’s a direct, performative way of interacting with generative systems, where the artist’s gestures shape the outcome on the fly.
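One way to turn pinches into tool selection and drawing is a small state machine over the fingertip position. This is a hypothetical sketch, not the author’s implementation. It assumes a toolbar occupying the top 10% of the frame with evenly spaced colour swatches (`PALETTE`, `TOOLBAR_HEIGHT` and `GestureCanvas` are invented names), and it treats a pinch outside the toolbar as “pen down”, so each continuous pinch becomes one stroke.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # normalized fingertip position

# Hypothetical toolbar: swatches laid out left to right along the top of the frame.
PALETTE = ["white", "amber", "violet", "teal"]
TOOLBAR_HEIGHT = 0.1


@dataclass
class Stroke:
    colour: str
    points: List[Point] = field(default_factory=list)


@dataclass
class GestureCanvas:
    colour: str = PALETTE[0]
    strokes: List[Stroke] = field(default_factory=list)
    active: Optional[Stroke] = field(default=None, repr=False)

    def update(self, tip: Point, pinched: bool) -> None:
        """Process one frame: fingertip position plus pinch state."""
        x, y = tip
        if y < TOOLBAR_HEIGHT:
            # Pinching inside the toolbar picks the swatch under the fingertip.
            if pinched:
                idx = max(0, min(int(x * len(PALETTE)), len(PALETTE) - 1))
                self.colour = PALETTE[idx]
            self.active = None  # entering the toolbar ends any stroke
            return
        if pinched:
            if self.active is None:
                # Pen down: start a new stroke in the current colour.
                self.active = Stroke(self.colour)
                self.strokes.append(self.active)
            self.active.points.append(tip)
        else:
            self.active = None  # pen up ends the current stroke
```

Keeping strokes as point lists rather than pixels makes it easy to re-render them at whatever resolution the generator expects before each frame is sent.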

Here’s a short video demo of Generative Mycology in action:

Above: Gesture-based drawing transformed into AI-generated fungal visuals in real time.

From Lag to Live

In earlier iterations, this kind of setup was constrained by the cloud – latency, dropped frames, and streaming delays all created friction between input and output. Now, with everything running locally using SDXL Turbo on the RTX 5090, responsiveness has improved dramatically.
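Even on a local GPU, a real-time loop can fall behind if every sketch frame is queued for generation. A common remedy is a single-slot “latest frame” buffer. The gesture loop overwrites it, and the generator always picks up the newest sketch, skipping stale ones. This sketch shows one way such a pipeline might be wired, not a description of RAPTURE’s internals, and `LatestFrame` is a hypothetical name.

```python
import threading
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


class LatestFrame(Generic[T]):
    """Single-slot mailbox: writers overwrite, the reader always gets the newest item."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._item: Optional[T] = None
        self._has_item = False
        self.dropped = 0  # frames overwritten before the reader took them

    def put(self, item: T) -> None:
        """Called by the gesture loop for every new sketch frame."""
        with self._cond:
            if self._has_item:
                self.dropped += 1  # previous frame was never consumed
            self._item = item
            self._has_item = True
            self._cond.notify()

    def get(self, timeout: Optional[float] = None) -> Optional[T]:
        """Called by the generator; blocks until a frame arrives or the timeout expires."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._has_item, timeout=timeout):
                return None
            item = self._item
            self._item = None
            self._has_item = False
            return item
```

The `dropped` counter is a simple way to judge whether generation is keeping pace with the drawing rate.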

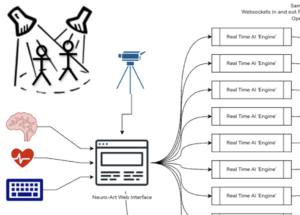

The system includes:

- A gesture-based interface built with Python and MediaPipe

- WebSocket-based communication to stream images and results

- A ComfyUI workflow running SDXL Turbo

- Local AI inference and rendering, eliminating the need for cloud processing
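The ComfyUI side can be driven through its local HTTP and WebSocket endpoints. This sketch assumes ComfyUI’s default address (`127.0.0.1:8188`) and a workflow exported in API format. It also assumes hypothetical node IDs for the `LoadImage` node that receives the sketch and the `CLIPTextEncode` node holding the fungal prompt. Real IDs depend on the workflow, and the actual RAPTURE wiring may differ. The code patches a copy of the workflow and builds (but does not send) the `POST /prompt` request, along with the WebSocket URL a client would listen on for progress.

```python
import copy
import json
import urllib.request
from typing import Any, Dict

# Assumption: ComfyUI is running locally on its default host and port.
SERVER = "127.0.0.1:8188"


def build_prompt_request(
    workflow: Dict[str, Any],
    image_node: str,
    text_node: str,
    sketch_filename: str,
    prompt_text: str,
    client_id: str,
) -> urllib.request.Request:
    """Return a POST /prompt request for a patched copy of an API-format workflow."""
    wf = copy.deepcopy(workflow)  # leave the template workflow untouched
    # Hypothetical node IDs: point LoadImage at the latest sketch and set the prompt text.
    wf[image_node]["inputs"]["image"] = sketch_filename
    wf[text_node]["inputs"]["text"] = prompt_text
    body = json.dumps({"prompt": wf, "client_id": client_id}).encode("utf-8")
    return urllib.request.Request(
        f"http://{SERVER}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def websocket_url(client_id: str) -> str:
    """WebSocket endpoint on which ComfyUI reports progress for this client."""
    return f"ws://{SERVER}/ws?clientId={client_id}"
```

Passing the request to `urllib.request.urlopen` would queue the job. A WebSocket client connected to `websocket_url(client_id)` can then receive execution updates. The sketch image itself would first need to be placed in ComfyUI’s input folder, for instance through its `/upload/image` endpoint.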

A Living Interface

Why fungus? Mycelium networks are powerful metaphors for connection, emergence, and resilience – ideal subjects for generative art. In Generative Mycology, the artist doesn’t simply prompt an AI but enters into a live feedback loop with it. Gestures act as seeds. The AI interprets and cultivates them into branching, living visuals.

The results feel alive – textures that spore, filaments that creep and connect, pulses of colour that bloom in response to the drawn forms.

What’s Next

This is just the beginning of more embodied and interactive explorations in real-time AI-driven art. With the technical groundwork in place, I’m excited to explore new creative modes – live drawing performances, audience-responsive installations, and other experiences that unfold in the moment.

Stay tuned for more fungi, more fluidity, and more RAPTURE.

Posted: April 30, 2025 by David Oxley

Written by

David Oxley

You may also be interested in:

EXPOS3D: The Art of the System

Building Trevor Jones’ Digital Revolution The core of EXPOS3D was always clear: surveillance, data capture, and participation under observation. The exhibition invited visitors into a space where technology not only

Algorithmic art - tactile prints

I recently saw some fantastic examples of 3D printing on fabric for the fashion industry, and reached out to see if my artwork, Apollonian Mandelbrot, could be realized in this way. I'm thrilled with the result.

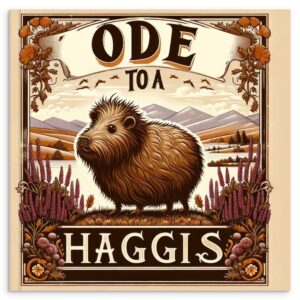

Ode To a Haggis

My book, Ode To A Haggis, began as a fun project inspired by a series of AI-generated images. I started creating these pictures, imagining haggis as if they were real creatures roaming the Scottish Highlands. The images were a hit, and it wasn’t long before a friend asked if I could compile them into a book for her.

Island of Wonders

Island of Wonders is my contribution to the 'On An Island' collection, as part of 23ArtCollective, and it has found its home on objkt.com. This project is a mix of imagination, art, and storytelling, blending elements of wonder and curiosity into a unique digital collectible experience.Dante's Pixel Inferno

In a recent project, I had the incredible opportunity to collaborate with Trevor Jones, a renowned artist blending traditional and digital art forms. Together, we developed "Dante's Pixel Inferno", an exclusive CryptoAngels video game that brings his unique work to life in an interactive way.

Mirror, mirror...

In the world of art and technology, reflections take on new dimensions – both literal and metaphorical. For my submission to the Transient Labs’ Open Call for the Self-Reflection exhibit,

NFTcc Rome

Neuro-Art with BrainSigns and Myndek at Videocittà Festival I had the privilege of showcasing my real-time Neuro-Art system at the Videocittà festival in Rome, a vibrant celebration of digital and

RAPTURE project

RAPTURE, short for Real-time Ai Performance Transformation and User-Responsive Experience, is an extension of my Neuro-Art system that emerged after the Naples NFTcc event. While the original system was driven