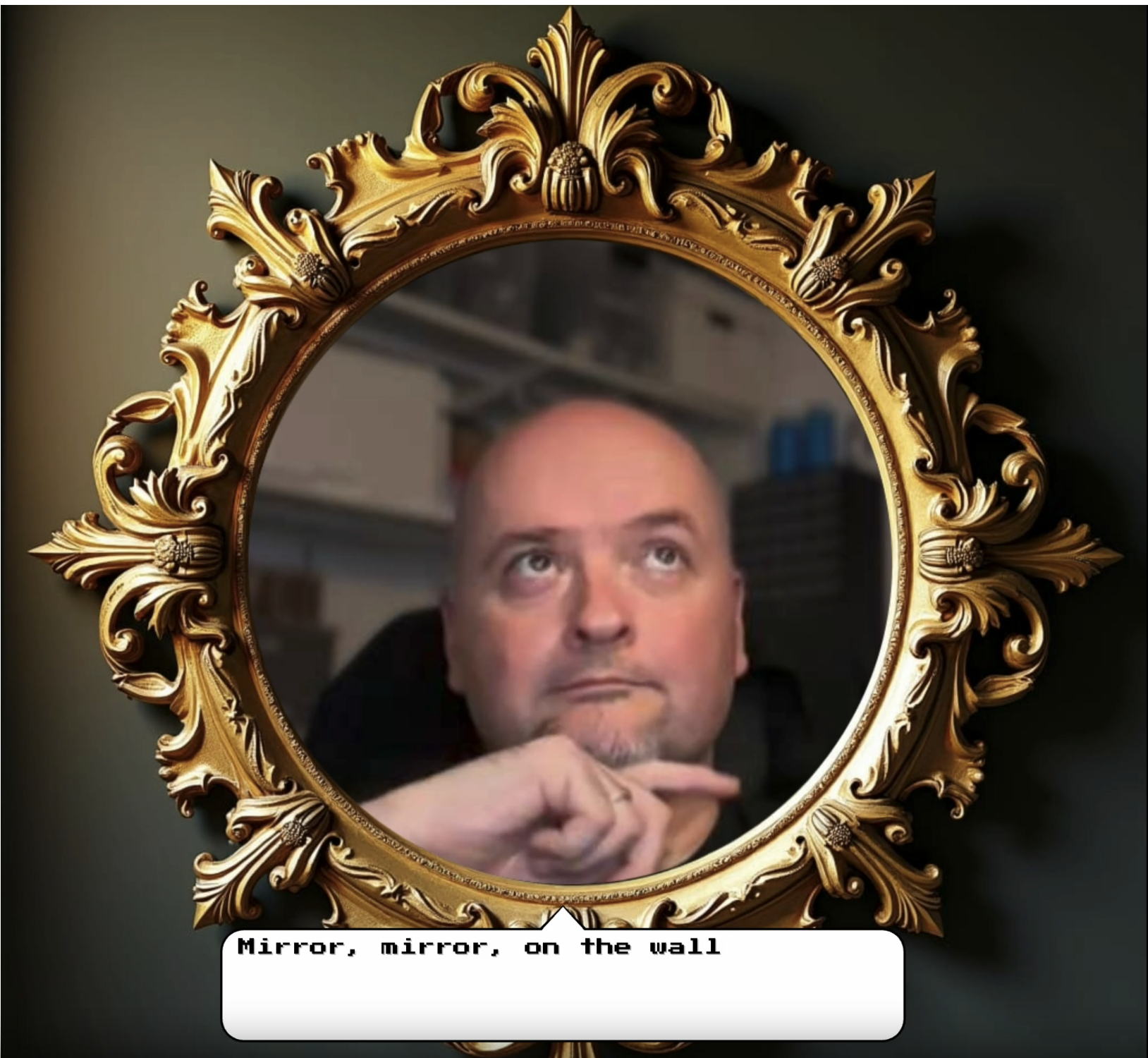

In the world of art and technology, reflections take on new dimensions, both literal and metaphorical. For my submission to Transient Labs’ Open Call for the Self-Reflection exhibit, I used my RAPTURE engine to explore the intersection of performance and real-time interaction with AI. What emerged was Mirror, Mirror: a recorded session of me interacting with a magic mirror powered by live AI processing.

The piece is digital art in the form of a performance, a dialogue, and a journey of self-exploration. Through dynamic prompts and responses, the AI became a partner in creating a reflective narrative, revealing in real time how we perceive and define ourselves.

Selected for the Canvas 3.0 Gallery

Mirror, Mirror was selected as one of the 16 featured artworks for the Self-Reflection exhibit at the Canvas 3.0 Gallery in the Oculus World Trade Center, NYC. The exhibit was a celebration of introspection, displayed on cutting-edge screens that brought these reflections to life.

With more than 292 submissions received, I was honoured to have my work selected and showcased alongside such a talented group of artists. This recognition highlights the potential of real-time, AI-driven art and of performance art in digital spaces.

About the Open Call

The Self-Reflection Open Call by Transient Labs invited creators to submit artworks themed around introspection. This opportunity was tied to the launch of The Lab by Transient Labs, an innovative platform empowering creators with tools for dynamic art and custom contracts. The selected artworks were exhibited on September 2, 2024, in a format tailored for large-scale digital displays.

Creating Mirror, Mirror

The concept began as an experiment: could a magic mirror be both a canvas and a collaborator? Using real-time AI processes, the mirror responded to my prompts in the moment, crafting visual and narrative elements that felt alive. It was a constant interplay of control and surrender, allowing the AI to reflect back interpretations of my input.

Bonus: Exploring LoRA Training

I recently trained my first-ever LoRA (Low-Rank Adaptation) model using a dataset of my own face, thanks to a cloud-based solution: Kohya on RunDiffusion. The process was efficient, taking just two hours from setup to completion on a medium-sized server. Integrating the model into my ComfyUI workflow with SDXL Turbo allowed me to transform generic AI outputs into something personal and familiar. If you’re starting with LoRA, I highly recommend RunDiffusion’s tutorial for its helpful insights on folder structures, file paths, and configuration settings. This has been a game-changer for customizing AI-generated art – and yes, my cats are next!
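For anyone curious about what a LoRA actually adds to a model, here is a minimal sketch of the underlying idea in plain Python. It is not code from Kohya, RunDiffusion, or ComfyUI; it assumes the standard LoRA formulation, where the original weight matrix W stays frozen and training learns two small matrices, A (r × d_in) and B (d_out × r), whose product, scaled by alpha / r, is added to W. The helper names `matmul`, `lora_merge`, and `param_counts` are hypothetical, chosen for illustration.

```python
# Minimal LoRA sketch (illustrative only, not Kohya/ComfyUI code).
# Assumption: standard LoRA, where a frozen weight W (d_out x d_in) is adapted
# by a low-rank update B @ A (A is r x d_in, B is d_out x r), scaled by alpha / r.

Matrix = list[list[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    """Plain-Python matrix product X @ Y."""
    inner = len(Y)
    assert len(X[0]) == inner, "inner dimensions must match"
    return [
        [sum(X[i][k] * Y[k][j] for k in range(inner)) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def lora_merge(W: Matrix, A: Matrix, B: Matrix, alpha: float) -> Matrix:
    """Return a new matrix W + (alpha / r) * (B @ A); W itself is not modified."""
    r = len(A)  # LoRA rank = number of rows in A
    delta = matmul(B, A)  # shape d_out x d_in, but rank at most r
    scale = alpha / r
    return [
        [W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
        for i in range(len(W))
    ]


def param_counts(d_out: int, d_in: int, r: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tune of W vs. the LoRA pair (A, B)."""
    full = d_out * d_in
    lora = r * d_in + d_out * r
    return full, lora


if __name__ == "__main__":
    print(param_counts(1024, 1024, 8))
```

For a 1024 × 1024 layer at rank 8, `param_counts(1024, 1024, 8)` returns `(1048576, 16384)`: the LoRA trains roughly 1.6% as many parameters as the full layer, which helps explain why training one is comparatively light. Because B is usually initialised to zeros, the adapted model starts out identical to the base model and only drifts toward your own dataset (in my case, my face) as training proceeds.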

Looking Ahead

This project let me test my ideas for incorporating AI into performance art and interactive installations. The collaboration between human intent and machine response has vast potential for storytelling, audience engagement, and artistic exploration.

To all who attended the exhibit or supported this project, thank you for joining me in this reflective journey. The future of art and tech is bright, and I’m excited to keep exploring it – one reflection at a time.

Posted: September 2, 2024 by David Oxley

You may also be interested in:

EXPOS3D: The Art of the System

Building Trevor Jones’ Digital Revolution The core of EXPOS3D was always clear: surveillance, data capture, and participation under observation. The exhibition invited visitors into a space where technology not only

Generative Mycology: Real-time AI Art with Local Power

In this latest stage of my RAPTURE journey, I’ve taken a significant step forward – moving from cloud-based workflows to fully local, real-time rendering powered by an RTX 5090 GPU

Algorithmic art - tactile prints

I recently saw some fantastic examples of 3D printing on fabric for the fashion industry, and reached out to see if my artwork, Apollonian Mandelbrot, could be realized in this way. I'm thrilled with the result.

Ode To a Haggis

My book, Ode To A Haggis, began as a fun project inspired by a series of AI-generated images. I started creating these pictures, imagining haggis as if they were real creatures roaming the Scottish Highlands. The images were a hit, and it wasn’t long before a friend asked if I could compile them into a book for her.

Island of Wonders

Island of Wonders is my contribution to the 'On An Island' collection, as part of 23ArtCollective, and it has found its home on objkt.com. This project is a mix of imagination, art, and storytelling, blending elements of wonder and curiosity into a unique digital collectible experience.Dante's Pixel Inferno

In a recent project, I had the incredible opportunity to collaborate with Trevor Jones, a renowned artist blending traditional and digital art forms. Together, we developed "Dante's Pixel Inferno", an exclusive CryptoAngels video game that brings his unique work to life in an interactive way.

NFTcc Rome

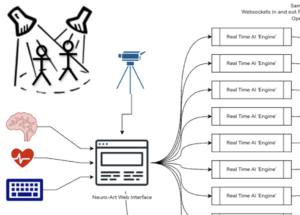

Neuro-Art with BrainSigns and Myndek at Videocittà Festival I had the privilege of showcasing my real-time Neuro-Art system at the Videocittà festival in Rome, a vibrant celebration of digital and

RAPTURE project

RAPTURE, short for Real-time Ai Performance Transformation and User-Responsive Experience, is an extension of my Neuro-Art system that emerged after the Naples NFTcc event. While the original system was driven