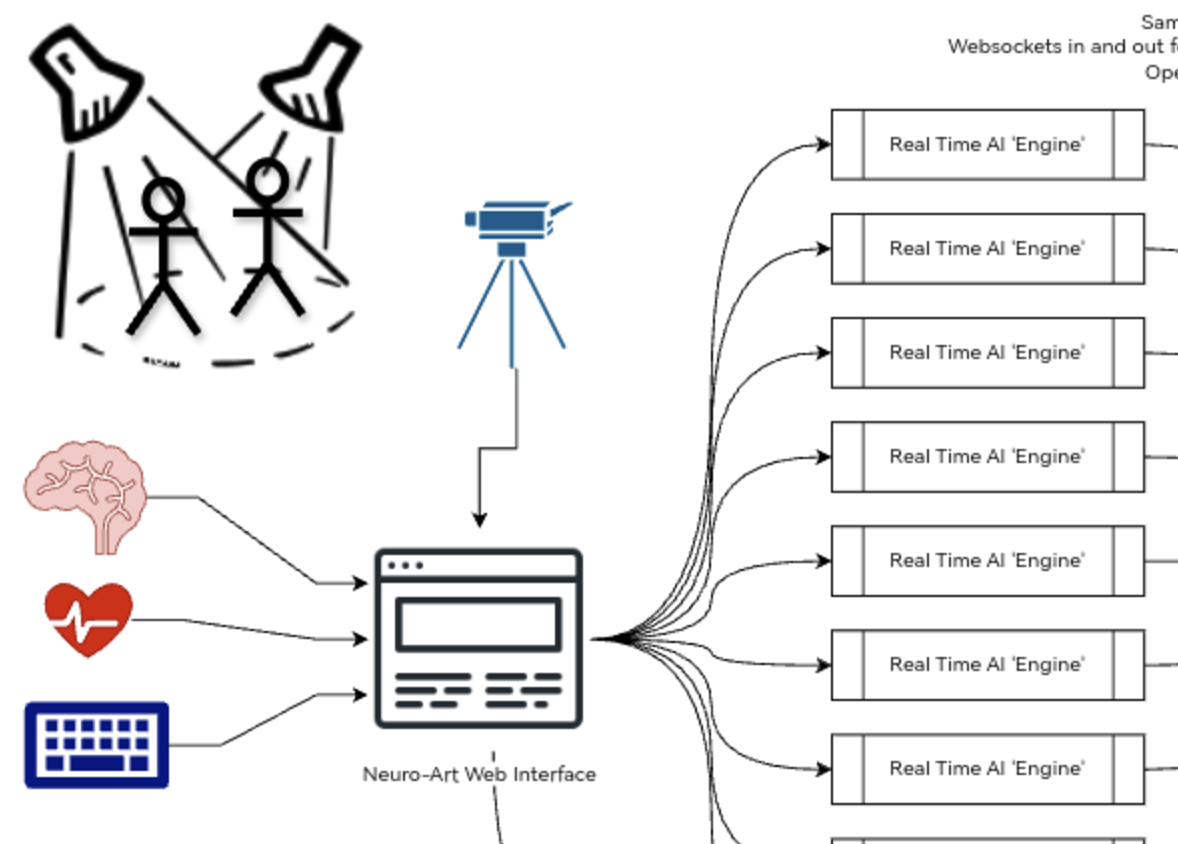

RAPTURE, short for Real-time AI Performance Transformation and User-Responsive Experience, is an extension of my Neuro-Art system that emerged after the Naples NFTcc event. The original system was driven by EEG inputs, but I discovered during testing that it could also use live video input from a webcam, paired with prompts, to generate dynamic visuals in real time. This was a promising start, though it became clear that further refinement and exploration were needed to unlock its full potential.

The idea of RAPTURE gained traction after discussions with performer C0R4 during the Naples event. She shared green screen footage for testing, which allowed me to explore how the system could interpret human forms and movements in a performance context. While the early results were visually engaging, they highlighted the need for enhancements such as pose detection and finer control over how movements were translated into AI-generated visuals.

At its core, RAPTURE is designed to transform live performances, creating an immersive layer of visuals that evolve in response to real-time inputs. However, refining the system required addressing challenges like improving pose recognition and better aligning outputs with the dynamics of live performances.

Refining RAPTURE for Performances

Subsequent testing focused on refining the engine with pose control technologies like OpenPose and ControlNet. I also experimented with adjustments to image size, compression ratios, and other parameters to make the system more responsive and visually cohesive. While these iterations significantly improved its functionality, there’s still much to explore in terms of responsiveness and interactivity. So far I have relied on cloud-based GPUs, which introduce latency and a risk of connectivity issues at venues. I understand that some people exploring real-time AI are reaching frame rates of up to 60fps, albeit at lower resolutions such as 512×512, by using powerful local machines with an RTX 4090 GPU.
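To make the trade-off between image size, compression, and cloud latency a little more concrete, here is a rough back-of-envelope sketch in Python. It is not RAPTURE's actual code: the function names are hypothetical, and the timing and compression figures in the example are illustrative assumptions rather than measurements. It also assumes the simplest case, where each frame waits for the previous one to come back before the next is sent.

```python
# Back-of-envelope frame budget sketch (hypothetical helper names,
# illustrative numbers only; not measured from the RAPTURE system).


def raw_frame_bytes(width: int, height: int, channels: int = 3) -> int:
    """Size in bytes of one uncompressed 8-bit frame."""
    return width * height * channels


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, at a target frame rate."""
    return 1000.0 / target_fps


def achievable_fps(inference_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Frame rate when every frame pays inference time plus a network round trip.

    Assumes no pipelining: the next frame is only sent once the
    previous result has arrived.
    """
    return 1000.0 / (inference_ms + network_rtt_ms)


def upload_mbps(width: int, height: int, fps: float,
                compression_ratio: float, channels: int = 3) -> float:
    """Approximate upstream bandwidth in megabits per second."""
    bits_per_frame = raw_frame_bytes(width, height, channels) * 8 / compression_ratio
    return bits_per_frame * fps / 1e6


if __name__ == "__main__":
    # Assumed figures: 16 ms per frame on the GPU, 84 ms network round trip.
    print("Budget per frame at 60fps (ms):", round(frame_budget_ms(60), 2))
    print("Local GPU, no network (fps):", achievable_fps(16))
    print("Cloud GPU with round trip (fps):", achievable_fps(16, 84))
    print("Upload for 512x512 at 60fps, 20:1 compression (Mbps):",
          round(upload_mbps(512, 512, 60, 20), 2))
```

Under these assumed numbers, the network round trip dominates the per-frame cost, not the image generation. That fits the pattern described above, where high frame rates are being reported on local hardware.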

Looking Ahead

RAPTURE represents a promising direction for integrating AI into live performances, but it’s a work in progress. Future developments will focus on deeper exploration of pose control, improved handling of complex video inputs, and the integration of more advanced real-time transformation features. While not groundbreaking in its current form, RAPTURE has the potential to become a powerful tool for creating immersive, dynamic experiences in both performance and installation art contexts.

Special thanks to C0R4 and the NFTcc community for their input and collaboration in shaping this ongoing project. As RAPTURE evolves, I’m excited to see how it can enhance the interplay between technology, art, and performance.

Posted: June 10, 2024 by David Oxley
